Let's start with the following sales table (same as before, products A-Z, etc):

CREATE TABLE salesdata (

product VARCHAR(1),

customerno INTEGER,

market_area INTEGER,

day DATE,

quantity INTEGER);

By using the salesdata table, we create a new table salesdata_prod_cust_pair that summarizes sales for each product-customer pair within a season.

CREATE TABLE salesdata_prod_cust_pair (

product VARCHAR(1),

customerno INTEGER,

market_area INTEGER,

first_day_overall DATE,

first_day_season DATE,

next_day DATE,

count_season INTEGER,

quantity_season INTEGER,

new_customer_flag INTEGER,

use_again_flag INTEGER,

use_again_days_between INTEGER);

The following SQL populates the table:

/* Explore season 2007-Q1 */

INSERT INTO salesdata_prod_cust_pair

SELECT s.product, s.customerno, s.market_area,

MIN(overall.min_day) overall_first_day,

MIN(s.day) seasonal_first_day,

MIN(seasonal_next.next_day) next_day,

COUNT(*) count_season,

SUM(s.quantity) quantity_season,

1-SIGN(DATEDIFF(MIN(s.day),MIN(overall.min_day))) new_customer_flag,

SIGN(DATEDIFF(MIN(seasonal_next.next_day),MIN(s.day))) use_again_flag,

DATEDIFF(MIN(seasonal_next.next_day),MIN(s.day)) use_again_days_between

FROM salesdata s

LEFT OUTER JOIN(

SELECT nxt.product, nxt.customerno, MIN(nxt.day) next_day

FROM salesdata nxt,

(SELECT product, customerno, MIN(day) min_day

FROM salesdata

WHERE day >= '2007-01-01'

GROUP BY product, customerno) q

WHERE nxt.customerno = q.customerno

AND nxt.product = q.product

AND nxt.day > q.min_day

GROUP BY nxt.product, nxt.customerno) seasonal_next

ON s.product = seasonal_next.product

AND s.customerno = seasonal_next.customerno,

(SELECT product, customerno, MIN(day) min_day

FROM salesdata

GROUP BY product, customerno) overall

WHERE s.product = overall.product

AND s.customerno = overall.customerno

AND s.day BETWEEN '2007-01-01' AND '2007-03-31'

GROUP BY s.product, s.customerno, s.market_area;

Please note that you must change red texts according to your database. DATEDIFF() returns days between two dates. 2007-01-01 and 2007-03-31 specify the season to explore; in this case 2007-Q1.

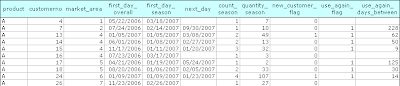

Here's some data:

As you can see:

- Product A was sold to Customer 4 first time in May 2006, so C-4 is not a new customer during season 2007-Q1, therefore new_customer_flag = 0.

- Product A was sold to Customer 13 first time on January 5th, 2007, so C-13 is a new customer during season 2007-Q1, thus new_customer_flag = 1. This customer used the product/service again on March 8th, so use_again_flag = 1 and use_again_days_between = 62.

OK. This was only the first part. By using the new salesdata_prod_cust_pair table, you can write in SQL:

SELECT product,

SUM(quantity_season) "Total quantity of items sold",

100*SUM(new_customer_flag)/COUNT(*) "% new customers in this season",

100*SUM(use_again_flag*new_customer_flag)/SUM(new_customer_flag) "% willingness to use product/service again",

AVG(use_again_days_between/new_customer_flag) "Average days before using

product/service again"

FROM salesdata_prod_cust_pair

GROUP BY product;

.. and get the following results:

See the last two columns! For example the willingness to use product/service again is an excellent indicator to monitor how much your customers trust your products.

By using an XY chart you can visualize the data:

Let's analyze:

- There must be some problem in products U and B. Marketing might be ok (42% new customers), but once used the first time, only 10 % of customers are willing to use the service again.

- The product C is in the other extreme. When customers use it, they probably love to use it again. Perhaps the teams responsible for products U and B can learn something from the team C?

I think I'm done with the sales data for now. Next time, something else.

1 comment:

Hello,

i only want to thank you for that good article. It helped me to implement such a analysis into my work.

Post a Comment